📬 Remix Reality Insider: CES 2026 and the Rise of Physical AI

Your weekly briefing on the systems, machines, and forces reshaping reality.

🛰️ The Signal

This week’s defining shift.

CES 2026 confirmed that this is the year of Physical AI.

For more than a decade, AI has lived mostly in the digital world. In 2026, that changes.

This year’s CES was not about smarter software. It was about intelligent machines moving into real environments, with the sensing and compute needed to operate continuously in the physical world. Autonomy, robotics, perception, and spatial interfaces are now being built for everyday use.

The message was consistent across the show floor: AI is no longer staying on screens. It is moving into the spaces where we live and work.

This week’s news surfaced signals like these:

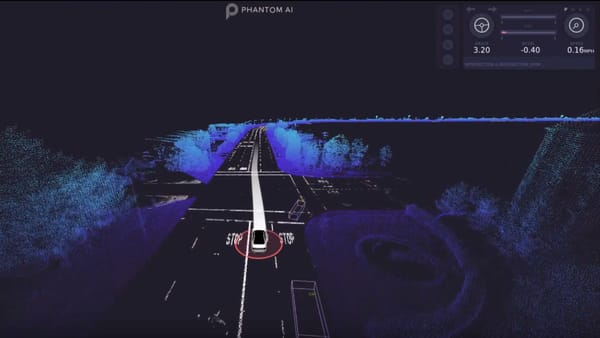

- Machines are learning to see in the real world: Teradar’s terahertz sensor expands what machines can see in fog, rain, snow, and glare, while Hesai doubling lidar output shows how fast advanced perception is scaling. Reliable vision is the baseline for physical AI.

- Autonomous mobility is entering its operating phase: Lucid, Nuro, and Uber’s production robotaxi, already in supervised road testing, reflects the shift from concept vehicles to transportation systems that must perform daily, at scale.

- Physical AI stacks are consolidating: Mobileye’s $900M acquisition of Mentee Robotics unifies autonomous driving and humanoid robotics under a shared platform of training, simulation, hardware, and safety. This lets improvements in one machine carry over to many others.

- AI companions are returning as hardware: Razer’s Project AVA, Plaud’s NotePin, and Zeroth’s consumer robots show a new class of on-device assistants that see, listen, and act within human spaces.

- Deployment pipelines are locking in: Caterpillar’s expanded NVIDIA partnership, Kodiak’s deal with Bosch, and telecom-backed funding for RayNeo’s AR glasses show how Physical AI is now flowing through manufacturing, logistics, and distribution networks.

Why this matters: CES 2026 made it clear that the Physical AI era is beginning. This is the year AI starts showing up in real spaces and everyday life. What we are seeing now is only the first phase of how machines will operate in homes, vehicles, workplaces, and cities.

🧠 Reality Decoded

Your premium deep dive.

In this week's editorial, Catherine Henry decodes the scale of the humanoid robot market and the forces shaping its development.

Three points stood out:

1. The humanoid robot market is becoming massive and unavoidable: Analysts now project humanoid robotics as a multi-trillion-dollar industry over the coming decades, with up to a billion robots eventually in use worldwide. This is no longer a niche technology story but rather a major new industrial sector.

2. The race is being won through full-stack execution, not single breakthroughs: Progress in humanoid robotics depends on aligning AI, hardware, manufacturing, sensors, capital, and supply chains into one continuous system. The ecosystems that can coordinate all of these layers at once are the ones moving fastest.

3. Scale creates its own momentum: As robots are deployed, they generate real-world data. That data improves the systems. Better systems enable broader deployment. This feedback loop is becoming the primary engine of progress in Physical AI.

Key Takeaway:

Humanoid robotics is no longer about whether the technology works. It is about who can build it, deploy it, improve it, and make it sustainable in the real world.

📡 Weekly Radar

Your weekly scan across the spatial computing stack.

🐝 Amazon Deepens Physical AI Push With Ring Sensors and Bee Assistant Features

- Ring introduces a new generation of smart sensors built on Amazon Sidewalk, along with Fire Watch alerts and an in-app store for third-party tools.

- Why this matters: From the front door to the wrist, Amazon is building systems that stay aware and respond in real time. These updates point to a future where devices manage more routine tasks without needing constant input.

🚗 Lucid, Nuro, and Uber Unveil Production Robotaxi at CES With On-Road Testing Underway

- Lucid, Nuro, and Uber revealed their production-intent robotaxi at CES 2026, built on the Lucid Gravity SUV.

- Why this matters: The Bay Area rollout is the first real test of how Lucid, Nuro, and Uber plan to take this robotaxi from concept to commercial service. The December road testing shows they’re moving beyond showroom demos.

🧠 NVIDIA Releases Alpamayo, its “ChatGPT Moment” for Autonomous Vehicles

- Alpamayo is positioned as a full-stack autonomy platform for developing, testing, and deploying reasoning-based autonomous vehicle systems.

- Why this matters: NVIDIA is laying out a full development setup for AVs, from models to data to simulation. It gives companies a faster way to build safer systems that can handle the kinds of edge cases that stall real-world deployment.

🤖 Mobileye Acquires Mentee Robotics to Expand Physical AI Capabilities

- Mobileye will acquire Mentee Robotics for $900 million to unify core AI systems across autonomous driving and humanoid robotics.

- Why this matters: At their core, humanoid robots and autonomous vehicles face the same challenge: acting safely and intelligently in a human-centered world. Unifying the tech stack across both makes strategic and technical sense.

🚙 Meta Pairs with Garmin for EMG In-Car Controls, Pauses Global Glasses Rollout

- Meta showcased Neural Band integration with Garmin’s in-car system, enabling gesture-based control without glasses.

- Why this matters: The Neural Band is becoming the backbone of Meta’s input strategy. More than an accessory for smart glasses, it acts as a bridge across screens, environments, and devices.

🕶️ RayNeo Secures Strategic Funding, Debuts First eSIM-Enabled AR Glasses

- China’s top telecom operators invested in RayNeo, marking their first move into consumer AR as part of a national infrastructure strategy.

- Why this matters: Telecom backing matters because it turns AR glasses from niche tech into a mass-market product. Carriers bring retail reach, billing plans, and the network access needed to make these devices truly standalone.

👤 Meet the Desktop Hologram That Watches, Listens, and Talks Back

- AVA transitions from a screen-based esports coach to a full-featured holographic assistant with contextual and task-based support.

- Why this matters: Razer is giving the AI assistant a physical form. Project AVA puts a holographic character on your desk, making digital help feel present, visible, and personal.

🏗️ Caterpillar Expands NVIDIA Partnership to Power Autonomy and Virtual Manufacturing

- Caterpillar is expanding its partnership with NVIDIA to bring AI to its machines, operator systems, and manufacturing operations.

- Why this matters: Caterpillar is building its physical operations on top of NVIDIA’s full AI stack. Autonomy, voice control, and factory simulation now run on the same integrated platform.

📷 Teradar Claims Terahertz Sensor Could Prevent 150,000 Road Deaths Annually

- Teradar introduced Summit, a long-range sensor designed to outperform radar and lidar in all conditions.

- Why this matters: ADAS and autonomous systems only work if vehicles can see clearly in all conditions. Teradar’s terahertz sensor is a step toward solving that, offering consistent, high-resolution vision where radar and lidar often fall short.

📸 Hesai to Double Annual Lidar Output to 4 Million Units in 2026

- Hesai will increase its annual lidar production capacity from 2 million to over 4 million units in 2026.

- Why this matters: Hesai doubling output signals that lidar demand is growing fast and spreading across industries as spatial computing goes mainstream.

🧱 LEGO Launches SMART Play With Sensor-Packed Bricks for Interactive, Screen-Free Play

- LEGO Smart Play uses built-in sensors to make brick creations respond during play.

- Why this matters: Sensors underpin the next wave of computing, waking up static objects and giving them the ability to do more. Smart Play shows LEGO moving toward toys that can sense and respond during play. It points to a future where physical play is enhanced by simple, built-in tech, without the need for more screens.

🌀 Tom's Take

Unfiltered POV from the editor-in-chief.

This week I could not stop thinking about something after watching LG’s demo of its new humanoid robot, CLOiD. And I wasn't alone.

LG used CES 2026 to present its vision of a "zero-labor household," and on paper this is a dream come true. A robot that does your laundry, folds your clothes, and frees up your time! It's basically Rosie from The Jetsons, finally showing up in real life.

And yet I watched the demo and my first reaction was... why is it so slow?

The robot carefully picked up a shirt, moved it, adjusted it, folded it, and repeated. The whole thing happened at the speed of a turtle. My Type A personality immediately imagined myself grabbing the shirt out of the robot’s hands and just doing it myself.

When I shared the video, a lot of people messaged me the same thing. The tech is amazing. The execution feels painfully slow.

I know it is early. I know it will improve. But it raised a deeper question for me. Why are we so focused on building humanoid robots for tasks that do not actually require a human form?

If the goal is folded laundry, wouldn't it be better to have your dryer upgraded so that it adds a new step to fold your clothes in minutes, rather than a human-shaped machine that takes hours to do what we can do in ten?

Perhaps the fastest path forward is not copying ourselves but rethinking the problem entirely.

Humanoid robots are coming, and I believe in that future. But for everyday life, the real win may come from machines that don't look like us at all and simply solve the problem better.

🔮 What’s Next

3 signals pointing to what’s coming next.

- Gaming continues to push immersive interfaces forward

XREAL and ASUS ROG pushing AR glasses to 240Hz for competitive gaming, alongside Razer’s evolution of Project AVA into a holographic desktop companion, shows how gaming companies continue to lead the development of new spatial interfaces.

- Perception hardware is entering its scale era

Teradar’s new terahertz sensor opens a fresh sensing domain for vehicles, while Hesai doubling lidar production to over 4 million units shows how fast demand for machine perception is accelerating. One company is pushing the frontier of what machines can see. The other is building the capacity to ship perception at industrial scale. Perception is no longer the bottleneck. It is becoming reliable and widely available.

- Humanoid robots move from vision to operations

Boston Dynamics deploying Atlas for the enterprise with Hyundai as a first customer and LG introducing CLOiD for the home show that humanoid robots are entering real environments with real customers and real jobs to do. Factories and living rooms are now where this technology gets tested and improved.

Know someone who should be following the signal? Send them to remixreality.com to sign up for our free weekly newsletter.

🛠️ This newsletter uses a human-led, AI-assisted workflow, with all final decisions made by editors.