mimic-video Uses Pretrained Video Models to Improve Robot Learning Efficiency by 10x

- mimic-video is a video-action model that reduces data needs for robot learning.

- Tests showed mimic-video learns tasks with 10x less data and converges twice as fast as typical VLA models.

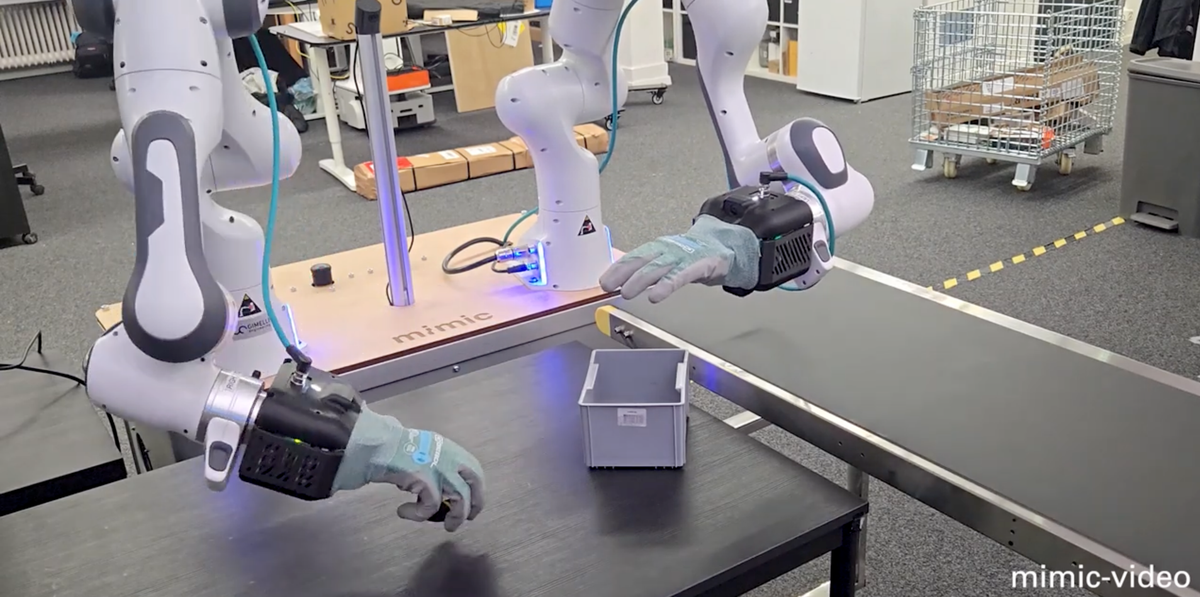

mimic-video is a new robot control system from teams at mimic robotics, Microsoft Zurich, ETH Zurich, ETH AI Center, and UC Berkeley. The researchers say the video-action model (VAM) helps robots learn faster and with less training data, in particular by reducing the need for the large-scale teleoperation data that traditional vision-language-action (VLA) models rely on.

Source: YouTube / mimic

mimic-video builds on a pretrained video model, NVIDIA Cosmos-Predict2, which is trained to capture how scenes change over time. The video model's prediction of how the scene will evolve serves as a visual plan, and a smaller action decoder turns that plan into robot movements. The video and action components are trained on separate schedules, so each can be optimized independently. This differs from traditional VLA models, which build on vision-language models (VLMs) trained on static image and text data and typically require large amounts of teleoperation data for finetuning.
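To make this division of labor concrete, here is a toy Python sketch. It is not mimic-video's code and does not use the Cosmos-Predict2 API. A frozen stand-in for the pretrained video model (`toy_video_model`, hypothetical) predicts a short visual plan, and a tiny action decoder (`ActionDecoder`, hypothetical) learns from a handful of demonstrations to turn the first planned frame into an action. Assumptions: frames are small vectors rather than images, the expert "gain" is made up, and "separate schedules" is approximated by keeping the video model frozen while only the decoder trains. The real system's architecture and training procedure are likely quite different.

```python
import random
from typing import List, Tuple

Frame = List[float]  # hypothetical low-dimensional stand-in for a video frame or latent


def toy_video_model(obs: Frame, horizon: int = 3) -> List[Frame]:
    """Hypothetical stand-in for a pretrained, frozen video model.

    Predicts `horizon` future frames in which the scene moves halfway toward
    a fixed goal state at each step. It has no trainable parameters.
    """
    goal = [1.0] * len(obs)
    plan, state = [], list(obs)
    for _ in range(horizon):
        state = [s + 0.5 * (g - s) for s, g in zip(state, goal)]
        plan.append(state)
    return plan


class ActionDecoder:
    """Hypothetical small decoder: action = w * (next planned frame - obs) + b, per dimension."""

    def __init__(self) -> None:
        self.w = 0.0
        self.b = 0.0

    def __call__(self, obs: Frame, plan: List[Frame]) -> Frame:
        return [self.w * (p - o) + self.b for p, o in zip(plan[0], obs)]

    def train(self, demos: List[Tuple[Frame, Frame]], lr: float = 0.1, epochs: int = 200) -> None:
        """Fit w and b by per-sample gradient descent on squared error.

        Only the decoder is updated; the video model is treated as frozen.
        """
        for _ in range(epochs):
            for obs, expert_action in demos:
                plan = toy_video_model(obs)  # frozen: nothing here is updated
                for p, o, y in zip(plan[0], obs, expert_action):
                    x = p - o  # planned change in this dimension
                    err = (self.w * x + self.b) - y
                    self.w -= lr * err * x
                    self.b -= lr * err


def fit_demo(seed: int = 0, n_demos: int = 10, gain: float = 2.0) -> ActionDecoder:
    """Build a few synthetic demonstrations and train the decoder on them.

    `gain` is a made-up expert controller gain the decoder should recover.
    """
    rng = random.Random(seed)
    demos = []
    for _ in range(n_demos):
        obs = [rng.uniform(-1.0, 1.0) for _ in range(2)]
        plan = toy_video_model(obs)
        demos.append((obs, [gain * (p - o) for p, o in zip(plan[0], obs)]))
    decoder = ActionDecoder()
    decoder.train(demos)
    return decoder


if __name__ == "__main__":
    dec = fit_demo()
    print(f"w={dec.w:.3f}, b={dec.b:.3f}")
```

Because the toy video model already encodes how the scene should change, the decoder only has to learn a simple mapping from plan to action. That is one way to read the article's claim about needing fewer demonstrations, though the toy does not measure that effect.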

The model was evaluated in simulation and on real robots, including dual-arm systems and dexterous humanoid hands. In these tests, it learned with 10x less data and converged about twice as fast as standard VLA baselines. When ground-truth video was supplied in place of the model's own predictions, success rates were higher, indicating that control performance depends on the quality of the video model.

🌀 Tom’s Take:

mimic-video uses video models to simplify robot learning, showing that visual prediction can reduce the need for large teleoperation datasets.