Helm.ai Cuts Data Requirement for Urban Autonomy to Just 1,000 Hours

- Helm.ai’s new AI system navigated urban roads it had never seen before after training on only 1,000 hours of real-world driving data.

- The approach bypasses traditional data requirements by simulating geometry and behavior instead of learning from pixels alone.

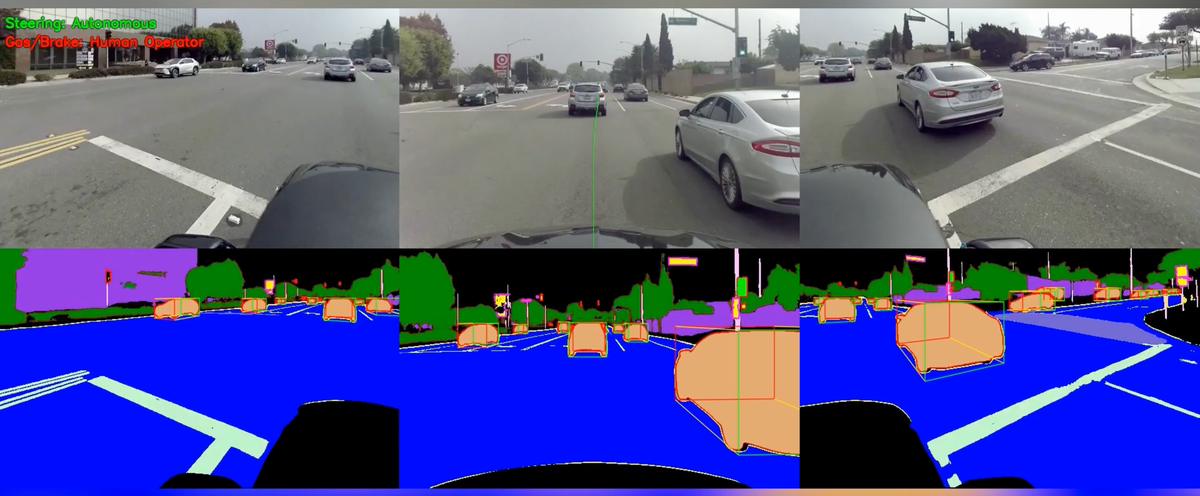

Helm.ai, a company building full-stack software for self-driving vehicles, has introduced a new AI architecture that can autonomously steer through complex city environments it has never encountered before, using only 1,000 hours of real-world driving data. In a recent demonstration, the company’s vision-only AI driver handled lane keeping, turns, and lane changes during a 20-minute drive in Torrance, CA, without a single disengagement.

This performance is enabled by Helm.ai’s new “Factored Embodied AI” framework, which it says departs from end-to-end models that rely on massive real-world datasets. Instead, the system trains in “semantic space”, a geometric and logical abstraction of the world, rather than learning directly from pixels. This allows the company to simulate road structure and behavior at scale, then fine-tune in the real world using only 1,000 hours of driving data.

"The autonomous driving industry is hitting a point of diminishing returns. As models get better, the data required to improve them becomes exponentially rarer and more expensive to collect," said Vladislav Voroninski, CEO and Founder of Helm.ai, in a press release. "We are breaking this 'Data Wall' by factoring the driving task. Instead of trying to learn physics from raw, noisy pixels, our Geometric Reasoning Engine extracts the clean 3D structure of the world first. This allows us to train the vehicle's decision-making logic in simulation with unprecedented efficiency, mimicking how a human teenager learns to drive in weeks rather than years."
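The factoring idea can be illustrated with a toy sketch. This is not Helm.ai's implementation: the names `SemanticScene`, `plan`, and `simulate_scenes`, the steering gains, and the 10 m braking threshold are all hypothetical. What it shows is the interface the company describes: the decision-making stage consumes only a compact geometric description of the scene, so it can be exercised on synthetic scenes sampled directly in that space, while a separate perception stage (not shown) would be responsible for producing the same structure from camera images.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SemanticScene:
    """Hypothetical semantic-space state: geometry, not pixels."""
    lateral_offset_m: float    # signed distance from lane center (+ = right of center)
    heading_error_rad: float   # vehicle heading minus lane heading (+ = pointing right)
    obstacle_ahead_m: Optional[float] = None  # nearest in-lane obstacle, if any


def plan(scene: SemanticScene, k_offset: float = 0.5, k_heading: float = 1.0) -> dict:
    """Toy planner that sees only the semantic scene.

    Steering is a proportional correction back toward the lane center
    (negative = steer left); braking is requested when an obstacle
    is closer than a hypothetical 10 m threshold.
    """
    steer = -(k_offset * scene.lateral_offset_m + k_heading * scene.heading_error_rad)
    brake = scene.obstacle_ahead_m is not None and scene.obstacle_ahead_m < 10.0
    return {"steer": steer, "brake": brake}


def simulate_scenes(n: int, seed: int = 0) -> List[SemanticScene]:
    """Sample synthetic semantic scenes directly, with no rendering step."""
    rng = random.Random(seed)
    scenes = []
    for _ in range(n):
        # Roughly 30% of scenes contain an obstacle somewhere ahead.
        obstacle = rng.uniform(2.0, 50.0) if rng.random() < 0.3 else None
        scenes.append(SemanticScene(
            lateral_offset_m=rng.uniform(-1.5, 1.5),
            heading_error_rad=rng.uniform(-0.2, 0.2),
            obstacle_ahead_m=obstacle,
        ))
    return scenes


if __name__ == "__main__":
    scenes = simulate_scenes(1000)
    actions = [plan(s) for s in scenes]
    brakes = sum(a["brake"] for a in actions)
    print(f"{len(scenes)} simulated scenes, {brakes} brake requests")
```

Because the planner never touches pixels, generating a thousand training or test scenes is just sampling numbers; the hard part the sketch leaves out is making real perception output match this representation closely enough for the planner to transfer.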

Helm.ai also tested the system in an open-pit mine, where it correctly identified drivable areas and obstacles in an off-road setting. According to the company, the framework lets automakers develop advanced driving features without relying on large-scale data collection from production fleets.

🌀 Tom’s Take:

Helm’s semantic simulation suggests that training in abstracted geometry can beat brute-force data collection.

Source: Business Wire / Helm.ai