ByteDance Releases Depth Anything 3, a Simpler Way to Reconstruct 3D Scenes from Images or Video

- ByteDance Seed Team released Depth Anything 3, a vision model that reconstructs 3D scenes from single images, multi-view inputs, or video.

- According to the research team, the model sets new benchmarks in pose estimation and depth accuracy.

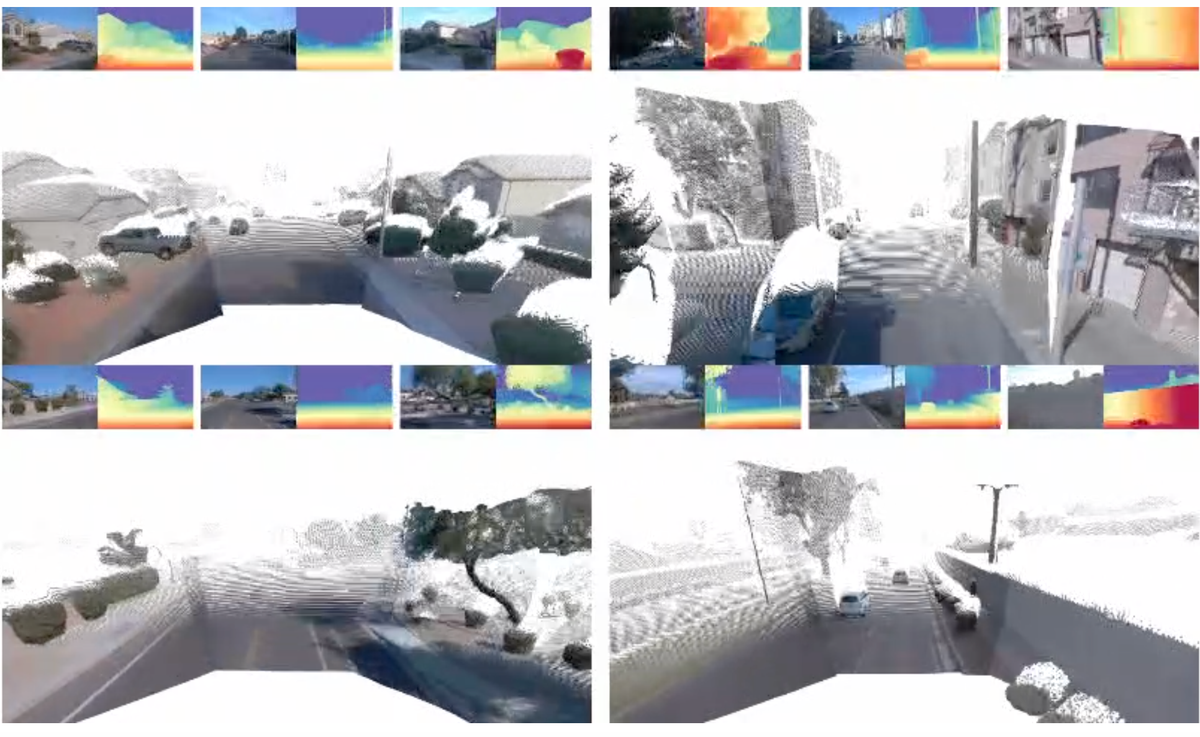

Depth Anything 3 is a new vision model from the ByteDance Seed Team, founded in 2023 to build advanced AI foundation models. The model reconstructs 3D scenes from visual input, including single images, a set of images, or video. The team behind the project recently released its open-source tools via the project site, GitHub, and Hugging Face, along with an interactive demo and technical report.

The model is built on a standard image processing system trained to understand the shape and depth of a scene. It learns by predicting how far things are based on how they appear, using a method called depth-ray prediction. While the design is simpler than previous approaches, it still produces accurate 3D results, supported by a training process where one version of the model teaches another.

Source: ByteDance Seed Team / Github

The research team reports that Depth Anything 3 outperforms both its predecessor, Depth Anything 2, and VGGT, the previous state-of-the-art model, on their internal benchmark for pose estimation and geometric accuracy. On its project page, the team highlights applications of this model in 3D design, robotics, and immersive media, and has made tools available for testing and integration, with support for exporting results in formats like .glb, .ply, and 3D Gaussian splats.

🌀 Tom’s Take:

By releasing open-source tools, the team is turning the model into more than just a paper, letting developers put it into practice for robotics, 3D design, or VR projects with minimal setup.

Source: Depth Anything 3 Project Page