Apple Brings Live Captions and Visual Assistance to Vision Pro and Apple Watch

- Vision Pro gains camera magnification, VoiceOver support, and a new API enabling visual assistance apps.

- Apple Watch introduces Live Captions integrated with iPhone’s Live Listen feature for users who are deaf or hard of hearing.

Apple has announced new accessibility features for its Vision Pro headset and Apple Watch. On Vision Pro, users can magnify their surroundings using the device’s front-facing camera. The headset will also gain VoiceOver support for describing on-screen content and visual elements.

Source: Apple Newsroom

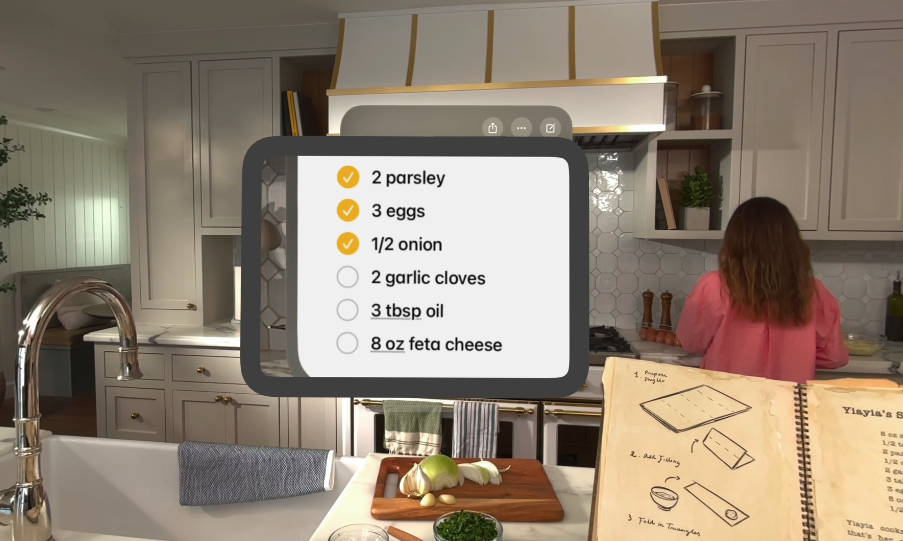

Apple is also introducing a developer API for Vision Pro that lets apps build new accessibility features on top of the headset’s camera. Apple’s examples include helping users who are blind or have low vision navigate their environment or read documents.

Apple Watch is gaining Live Captions for the first time. When paired with an iPhone running Live Listen, the watch can display a transcript of the audio captured by the phone. Live Listen sessions can be started, stopped, or resumed directly from the watch, which makes the feature practical in everyday settings such as meetings or classrooms.

🌀 Tom's Take:

Wearable technology lends itself well to advancing accessibility because it has access to more of our body's inputs. Immersive devices have proven themselves for entertainment and productivity, but their most powerful feature may be making it easier for everyone to interact with the world on their own terms.

Source: Apple