World Labs Debuts RTFM, a Real-Time Generative World Model

- RTFM is a real-time generative world model that creates interactive 3D video from a single image or video input.

- Runs in real time on a single H100 GPU and supports persistent exploration of both generated 3D scenes and real-world locations.

World Labs has released a research preview of RTFM (Real-Time Frame Model), its new real-time generative world model. The system generates interactive video that lets users explore persistent 3D worlds as well as real-world environments. RTFM runs in the browser on today's hardware and is designed to scale as compute improves.

Instead of building 3D scenes from explicit geometry such as triangle meshes or point clouds, RTFM uses generative video modeling to render new views directly from images or video. It is trained on large amounts of video and learns to render effects such as lighting and reflections purely from observation. It also handles both reconstruction and generation in a single model. To keep the world persistent over time, each frame is tagged with its pose, meaning its position and orientation in 3D space. This gives the model a form of spatial memory: it can keep track of where things are and generate new views without building a full 3D model.
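To make the spatial-memory idea more concrete, here is a minimal Python sketch, assuming each stored frame carries a simplified camera pose (a 3D position plus a single yaw angle) and that the frames closest to a requested viewpoint are retrieved as context for generating the next view. `Pose`, `PosedFrame`, `SpatialMemory`, `pose_distance`, and `angle_weight` are hypothetical names chosen for illustration. This is not World Labs' implementation, and the generative model itself is left out entirely.

```python
import math
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Pose:
    """Hypothetical simplified camera pose: position plus heading."""
    x: float
    y: float
    z: float
    yaw: float  # heading angle in radians


@dataclass
class PosedFrame:
    """A previously generated frame tagged with the pose it was rendered from."""
    frame_id: int
    pose: Pose


def pose_distance(a: Pose, b: Pose, angle_weight: float = 1.0) -> float:
    """Euclidean position distance plus a weighted, wrapped yaw difference."""
    d_pos = math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))
    # Wrap the yaw difference into [-pi, pi) and take its magnitude,
    # so headings of 0.05 and 2*pi - 0.05 count as 0.1 rad apart.
    d_yaw = abs((a.yaw - b.yaw + math.pi) % (2 * math.pi) - math.pi)
    return d_pos + angle_weight * d_yaw


@dataclass
class SpatialMemory:
    """Hypothetical store of posed frames, queried by viewpoint."""
    frames: list[PosedFrame] = field(default_factory=list)

    def add(self, frame: PosedFrame) -> None:
        self.frames.append(frame)

    def retrieve_context(self, query: Pose, k: int = 2) -> list[PosedFrame]:
        """Return the k stored frames whose poses are closest to the query."""
        return sorted(self.frames, key=lambda f: pose_distance(f.pose, query))[:k]


# Usage: store three frames, then ask for context near a new viewpoint.
memory = SpatialMemory()
memory.add(PosedFrame(0, Pose(0.0, 0.0, 0.0, 0.0)))
memory.add(PosedFrame(1, Pose(5.0, 0.0, 0.0, 0.0)))
memory.add(PosedFrame(2, Pose(0.5, 0.0, 0.0, 0.1)))

context = memory.retrieve_context(Pose(0.2, 0.0, 0.0, 0.0), k=2)
print([f.frame_id for f in context])  # prints [0, 2]
```

Retrieving only nearby frames keeps the context bounded no matter how much of the world has already been explored, which is one plausible way pose tags can support persistence. The article does not describe how RTFM actually selects or uses its context.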

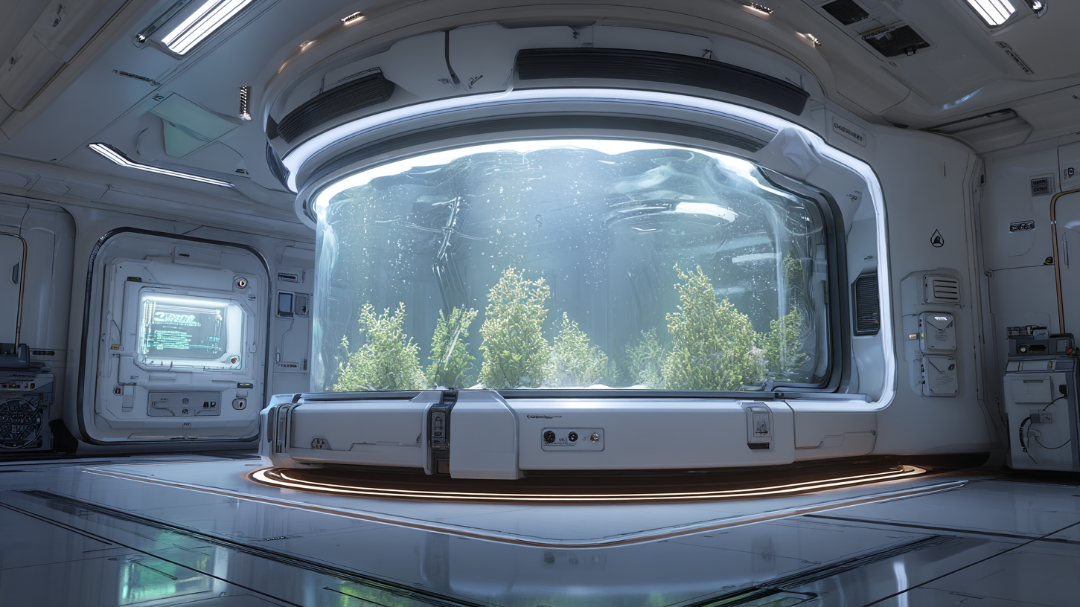

Source: World Labs

In a blog post announcing the new model, World Labs points out that generative world models typically need to process a massive amount of data very quickly, which makes them hard to run efficiently. One of the main goals for RTFM was to run it on today's hardware, specifically a single H100 GPU. The team tuned every part of the system to reach real-time performance without sacrificing scale. They note that as compute becomes cheaper and more powerful, larger versions of RTFM could enable even more detailed and interactive worlds.

A demo of RTFM in action is available from World Labs.

🌀 Tom’s Take:

RTFM moves away from explicit 3D representations reconstructed from captured scenes, such as Gaussian splats and photogrammetry, and instead uses interactive video to generate worlds on the fly. It is a different approach to world modeling that makes it possible to create persistent, interactive worlds without ever scanning them. This is especially useful for worlds that do not exist in reality.

Source: World Labs