NVIDIA Releases Alpamayo, Its “ChatGPT Moment” for Autonomous Vehicles

- Alpamayo is positioned as a full-stack autonomy platform for developing, testing, and deploying reasoning-based autonomous vehicle systems.

- Industry partners including Lucid, JLR, Uber, and Berkeley DeepDrive are exploring Alpamayo to develop reasoning-based systems for Level 4 autonomy.

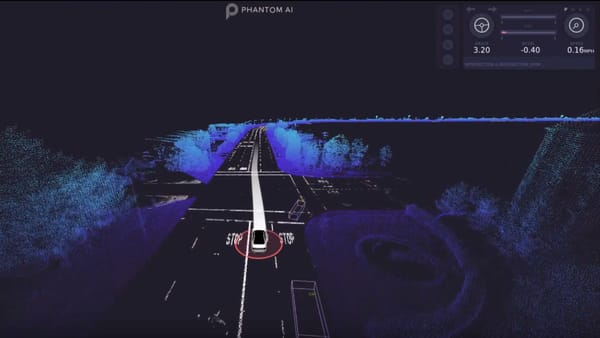

At CES, NVIDIA announced Alpamayo as a complete open platform for building autonomous vehicles that can handle rare and unpredictable driving situations. The release bundles the main components needed to build and test AV systems: an AI model that reasons step by step, simulation tools for running virtual road tests, and large datasets for training. NVIDIA had previously introduced the Alpamayo reasoning model in a research context, but CES marks the first time it is being released as part of a full open development stack that includes simulation tools and datasets.

“The ChatGPT moment for physical AI is here — when machines begin to understand, reason and act in the real world,” said Jensen Huang, founder and CEO of NVIDIA, in an official news release. “Robotaxis are among the first to benefit. Alpamayo brings reasoning to autonomous vehicles, allowing them to think through rare scenarios, drive safely in complex environments and explain their driving decisions — it’s the foundation for safe, scalable autonomy.”


The core model, Alpamayo 1, is a vision-language-action (VLA) model that takes video input and generates driving decisions along with step-by-step reasoning traces that explain each choice. With 10 billion parameters, it is designed as a large-scale “teacher” model: developers can distill it into smaller runtime models or use it to build tools such as auto-labelers and evaluators. Alpamayo targets long-tail driving scenarios that fall outside standard training data, with a focus on reasoning through rare or complex edge cases. Two other components support the model: AlpaSim, an open-source simulation engine with realistic sensor and traffic modeling, and the Physical AI Open Datasets, which provide more than 1,700 hours of driving footage covering a range of geographies and conditions. All three components are open and available now.

Partners like Lucid, JLR, and Uber are exploring Alpamayo to support their Level 4 autonomy efforts. Lucid is using the platform’s simulation tools, datasets, and reasoning models to improve decision-making in complex environments. JLR sees the open-source release as a catalyst for more responsible and collaborative development across the AV ecosystem. For Uber, Alpamayo offers new ways to address long-tail edge cases, one of the core challenges in deployment. With early adoption underway, NVIDIA is positioning Alpamayo as a foundation developers can use to train, test, and scale reasoning-based AV systems.

🌀 Tom’s Take:

NVIDIA is laying out a full development setup for AVs, from models to data to simulation. It gives companies a faster way to build safer systems that can handle the kinds of edge cases that stall real-world deployment.

Source: NVIDIA