Microsoft Unveils Rho-alpha to Bridge Language, Vision, and Touch in Robotics

- Rho-alpha is a new VLA model from Microsoft that combines language, vision, and touch to help robots follow natural instructions and adapt in real time.

- It’s trained using a mix of physical demonstrations and simulation with Isaac Sim, and is now being tested on dual-arm and humanoid robots.

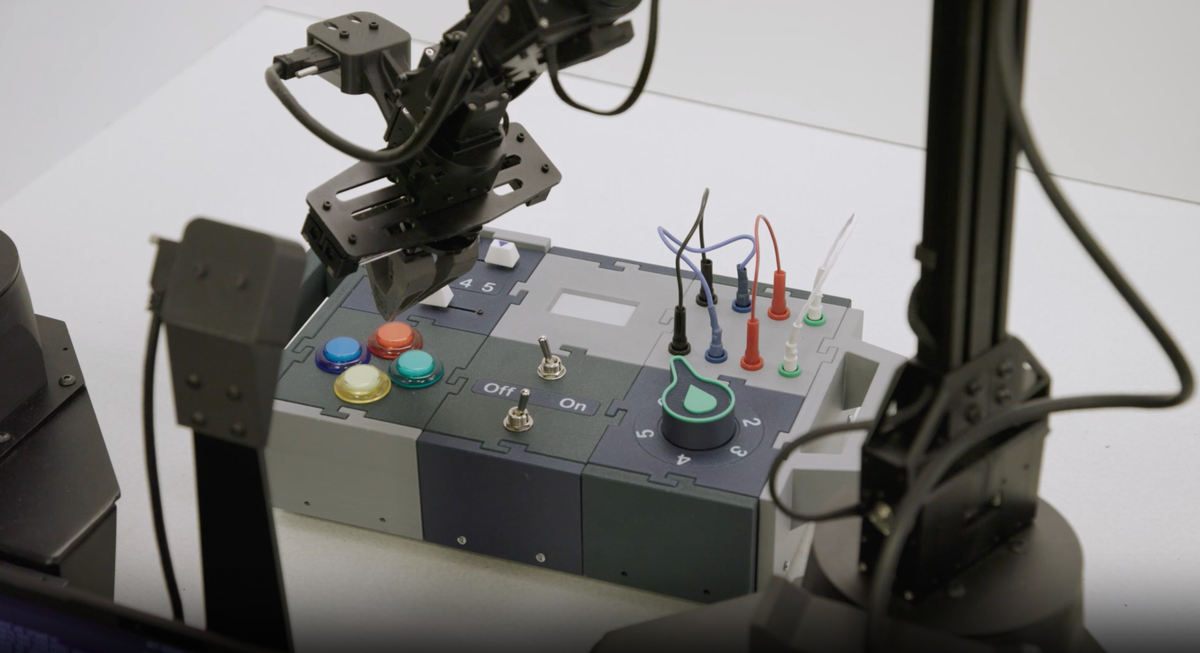

Microsoft has introduced Rho-alpha, a vision-language-action model that lets robots follow spoken or written instructions like “Insert the plug” or “Turn the knob to position 5.” It combines visual input with a sense of touch to guide actions, and is built to handle changing environments and respond to human input in real time. Rho-alpha builds on standard VLA systems by adding tactile sensing and the ability to learn from feedback during use.

The model is trained using a mix of physical robot demonstrations and large-scale simulation. It combines real-world movement data with visual question answering datasets from the web. Simulated training is done using NVIDIA’s Isaac Sim on Azure, which allows Microsoft to scale reinforcement learning for tasks where collecting physical data, especially for touch, is slow or impractical.

Rho-alpha is currently being tested on dual-arm and humanoid robots while Microsoft refines its training pipeline and system performance. It also supports human corrections through simple teleoperation tools, so the robot can adjust its behavior as it works. Microsoft is offering early access for teams that want to test the model on their own robots and with their own data.

🌀 Tom’s Take:

VLA models aim to unify how robots see, understand, and act, and Rho-alpha is a concrete step in that direction. Microsoft is pushing the field forward by integrating touch and real-time learning into a single, adaptable system.

Source: Microsoft Research