Intrinsic Introduces Vision Model That Sets New Benchmarks in Robotic Perception

- Intrinsic introduced the Intrinsic Vision Model, a new system that helps robots handle real-world factory conditions with greater precision.

- Developers can apply to use the model through the new AI for Industry Challenge, focused on solving electronics assembly tasks.

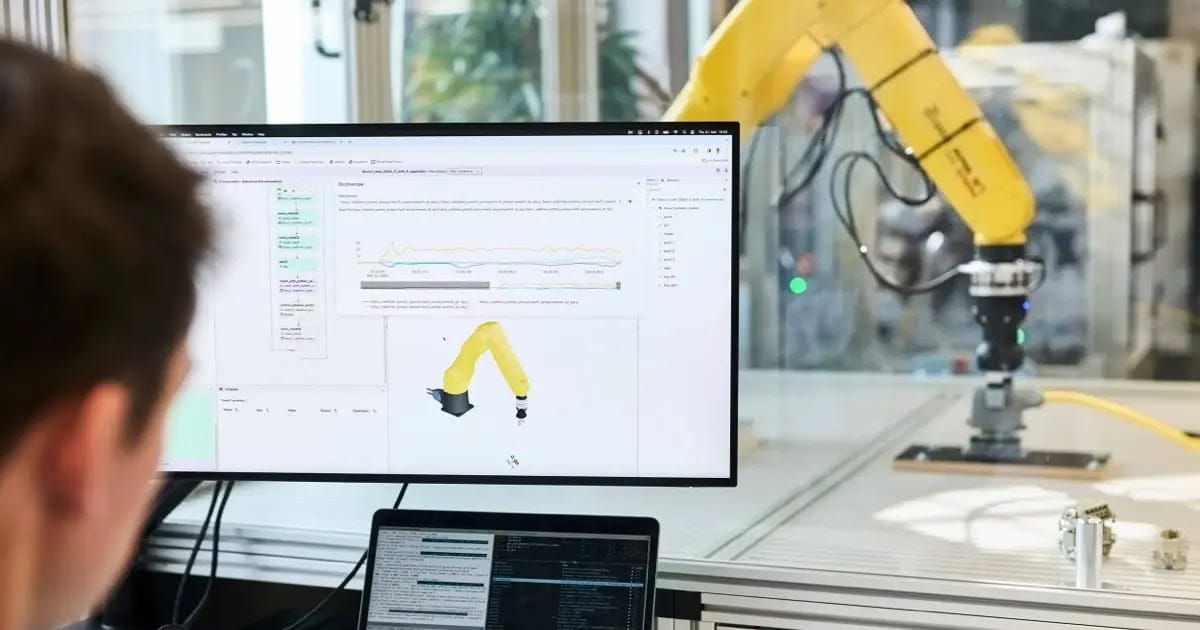

Intrinsic, an AI robotics company at Alphabet, has introduced a new system that helps robots see and understand their surroundings. The Intrinsic Vision Model (IVM) showed strong performance across different types of objects, ranking first in seven out of eleven tests at ICCV 2025. IVM is being built for use in real factories, where robots need to deal with changing parts, materials, and lighting without losing accuracy.

IVM is CAD-native and trained for multi-view, pixel-level reasoning, eliminating the need for application-specific training or expensive depth cameras. It supports tasks like pose detection, segmentation, and tracking using only standard RGB hardware. These capabilities unlock high-mix, low-volume applications, where robots must adapt on the fly to new parts and conditions, especially in operations like cable insertion or handling reflective materials.

Intrinsic is also launching the “AI for Industry Challenge” with Open Robotics. The challenge gives developers a chance to use open-source simulation tools to train models for electronics assembly tasks. The top teams will get access to the Intrinsic Vision Model and Flowstate, Intrinsic’s developer platform, to test their work on real robots. The goal is to give more developers hands-on experience building solutions for real-world manufacturing problems.

🌀 Tom’s Take:

A perception model that’s accurate, affordable, and ready out of the box is a big deal. It pushes robotics closer to plug-and-play for high-mix applications, accelerating adoption.

Source: Intrinsic