Google Introduces On-Device Gemini Model for Real-Time Robot Tasks

- Google’s new Gemini Robotics On-Device model runs directly on robots, handling tasks like folding clothes or zipping bags.

- A developer toolkit, available through a trusted tester program, allows quick adaptation to new tasks with just 50–100 examples.

Google has introduced Gemini Robotics On-Device, a new version of its multimodal robotics AI designed to operate directly on robotic hardware. Based on the Gemini Robotics system launched in March, this on-device model eliminates the need for a network connection, offering low-latency control and higher reliability in the field.

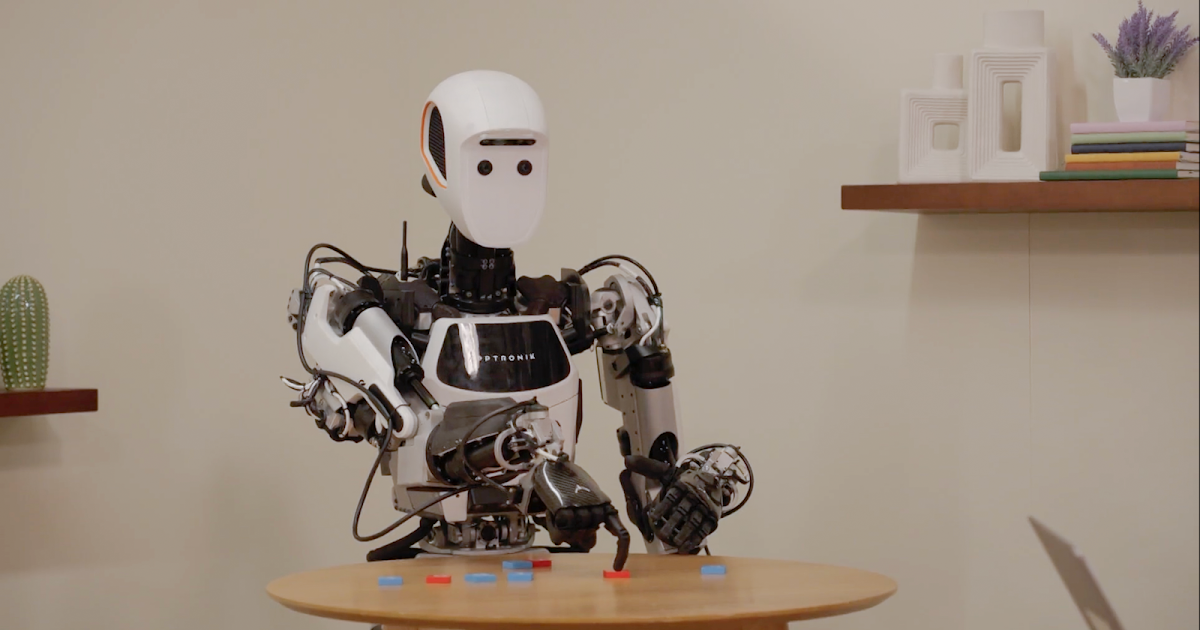

The model is built as a foundation system for bi-arm robots. It handles a range of physical tasks, such as manipulating objects and folding clothes, and can follow multi-step instructions. In benchmark evaluations, it outperforms earlier on-device models in generalization and instruction-following. It also adapts to different robot types, including the ALOHA lab system, the Franka FR3 dual-arm platform, and Apptronik’s Apollo humanoid.

To support adoption, Google is releasing a developer toolkit that enables rapid fine-tuning with as few as 50 to 100 demonstrations. The system includes safety components reviewed by internal teams, and initial access to the model and SDK is available through a trusted tester program.

🌀 Tom’s Take:

On-device generalist models are gaining momentum in robotics. Alongside Google’s Gemini Robotics On-Device, 1X recently announced its Redwood model, which is designed to run entirely onboard. Redwood handles tasks ranging from folding clothes to opening doors without relying on the cloud. Local processing reduces latency and boosts reliability, both of which are essential for safe operation in real-world environments.

Source: Google DeepMind