GM Reveals Eyes-Off Driving, AI Assistant, and NVIDIA-Powered Vehicle Platform

- GM will launch eyes-off highway driving in 2028, starting with the Cadillac ESCALADE IQ.

- A new NVIDIA Thor-powered system will run the car’s main functions and allow for faster updates and smarter features.

At its GM Forward event in New York, the company announced new features built on AI, autonomy, and robotics as it shifts its focus toward smarter, safer, and more connected vehicles. The updates include eyes-off highway driving, voice assistants, factory robots, and a new computing platform that runs all core vehicle functions.

Eyes-off driving is set to begin in 2028, starting with highway use in the Cadillac ESCALADE IQ. A turquoise light on the dashboard and mirrors will show when the system is active, letting the driver know they can take their eyes off the road. The system combines lidar, radar, and cameras to build a full picture of the road. These sensors feed a single model trained on real-world driving and tested in simulated emergency scenarios. GM built the system on its experience with Super Cruise, which has logged more than 700 million miles, and on technology from Cruise, its driverless-car subsidiary. Cruise contributes its multimodal perception systems, its simulation framework, and the training data behind five million miles of fully driverless operation.

Starting in 2026, GM vehicles will include voice features powered by Google Gemini. Drivers will be able to talk to the car to get help with directions, messages, or trip planning. Later, GM will add its own OnStar-based assistant that uses vehicle data to give updates on things like maintenance or suggest places to stop along the way.

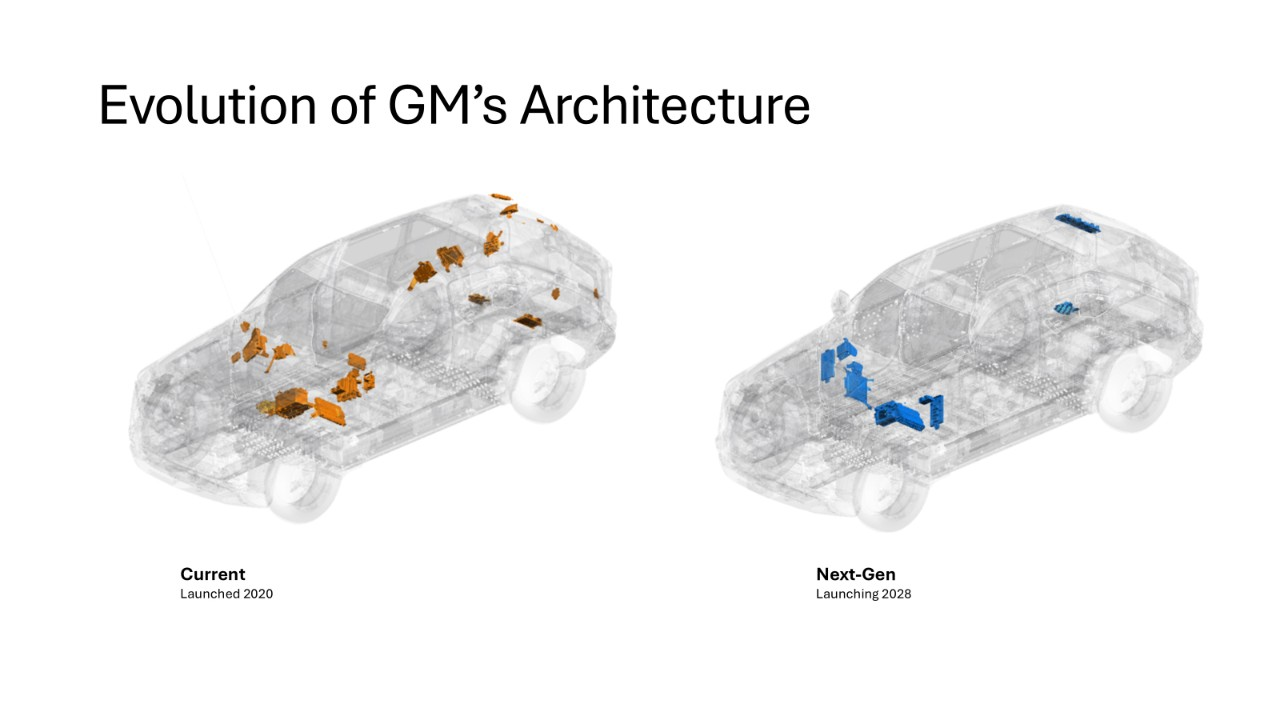

All of this will run on GM’s next-generation computing platform, which launches in 2028 and is powered by NVIDIA Thor. It connects key systems, such as propulsion, steering, braking, infotainment, and safety, to a central processor over a high-speed Ethernet backbone. GM says the architecture is built for cars that learn and improve throughout their lifetime, with real-time processing that supports faster responses and more advanced driver-assist features. Centralizing these functions is also meant to make every part of the vehicle easier to update, scale, and improve over time.

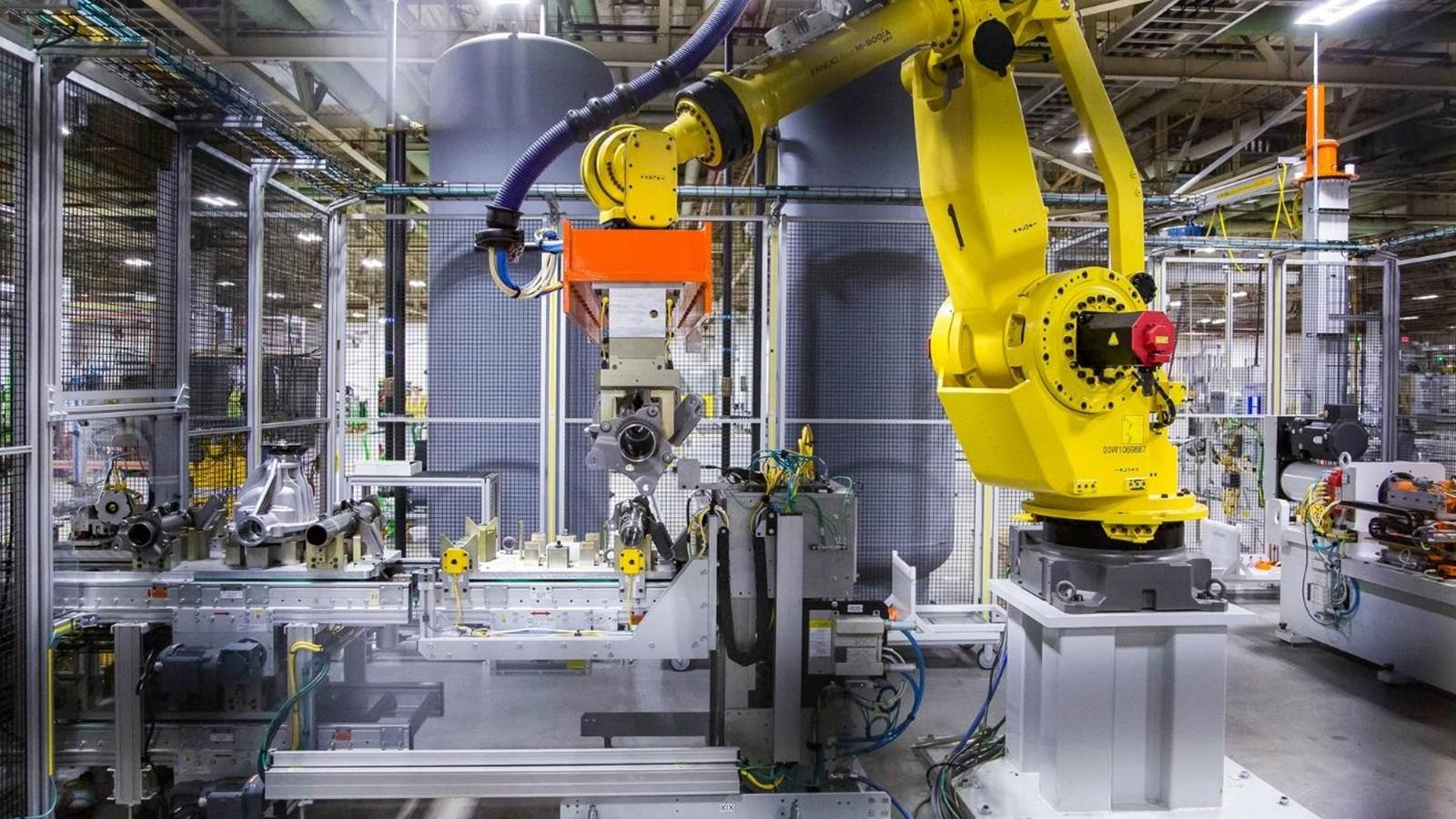

GM also shared new details about its robotics efforts, led by more than 100 engineers at its Autonomous Robotics Center in Michigan and a sister lab in California. These teams are developing advanced collaborative robots, or “cobots,” trained on decades of GM factory data, such as sensor feeds, quality metrics, and telemetry from thousands of machines, so the robots can keep improving as they gather more production data. The cobots are now being rolled out in U.S. assembly plants to help with tasks, improve safety, and support workers. GM is also building the software and hardware needed to run these systems on the factory floor.

🌀 Tom’s Take:

These updates signal that GM is going all-in on embodied intelligence and physical AI, evolving its vehicles to meet the demands of a smarter, more adaptive era.

Source: General Motors