Galbot Unveils AI Models for Dexterity and Navigation in Real-World Robotics

- DexNDM gives robots better control for handling objects, allowing them to turn, adjust, and assemble items with accuracy across different shapes and positions.

- NavFoM helps robots move through unfamiliar spaces and follow spoken instructions without retraining, using data from 8 million navigation examples.

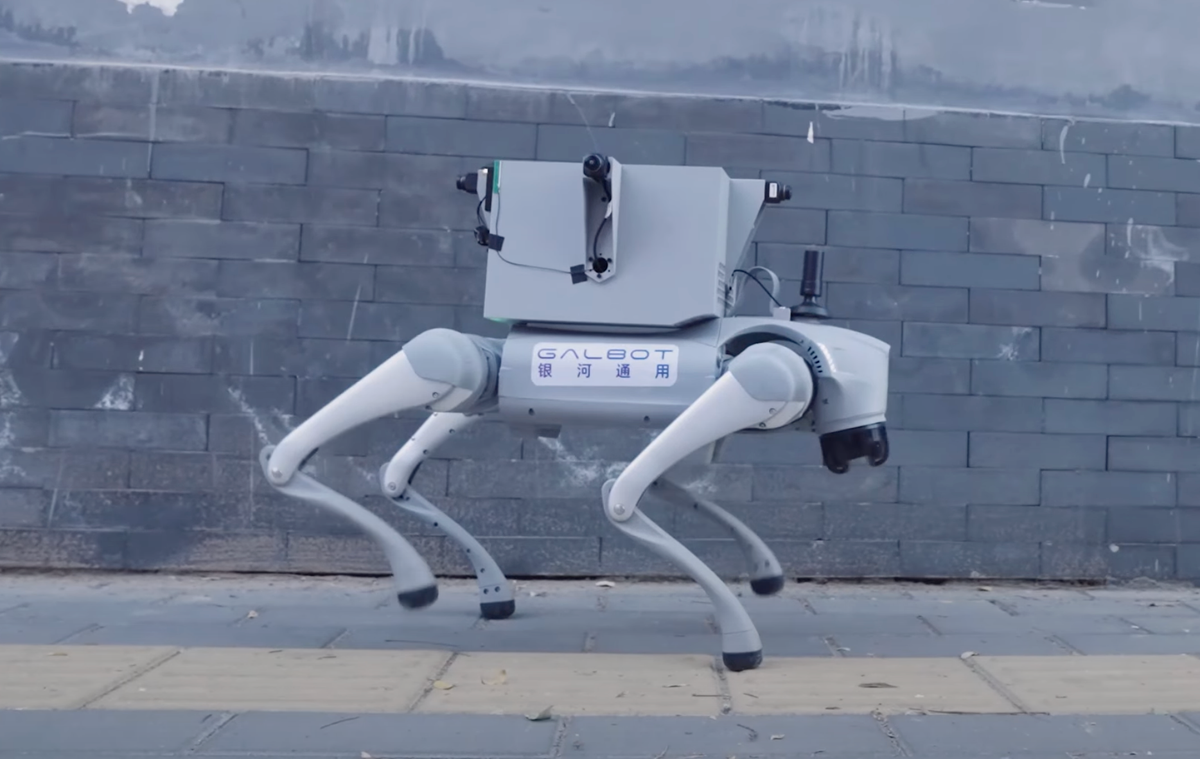

Galbot has launched two new AI models designed to expand what robots can do in the real world. DexNDM is a neural dynamics model that improves how robots handle physical objects with precise, flexible control. NavFoM is a foundation model that allows robots to navigate unfamiliar environments and follow instructions without additional training. Developed in collaboration with Tsinghua University, Peking University, the University of Adelaide, and Zhejiang University, these models aim to move robotics closer to systems that can take on a wide range of tasks across different settings and robot types.

DexNDM (Dexterous Hand Neural Dynamics Model) is designed to help robots perform tasks that require precise hand control, such as turning, adjusting, or inserting objects. It can handle a wide range of item types, from small electronics to long tools, and rotate and adjust objects no matter how the robot’s hand is positioned.

Source: YouTube / Galbot

The model is trained on individual tasks and object types, then combines what it learns into a single system that can be used across different jobs and robots. Galbot has also built DexNDM into a teleoperation system where a human operator gives simple, high-level commands, and the robot handles the detailed hand movements on its own. This allows tasks like tool use or assembly to be done more efficiently, combining human judgment with robotic precision.

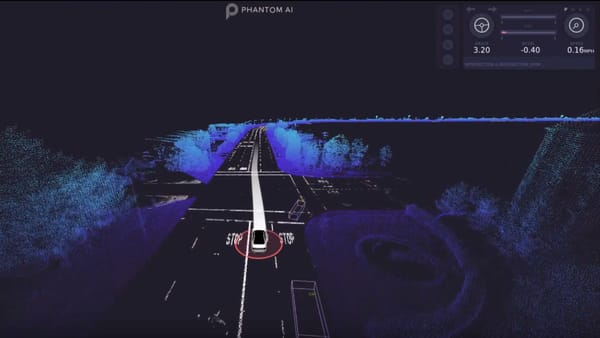

NavFoM (Navigation Foundation Model) is designed to give robots more flexibility in how they move through the world. Most navigation systems are limited to specific tasks or environments, but NavFoM works across both indoor and outdoor settings without needing extra training or pre-mapped data.

Source: YouTube / Galbot

Trained on 8 million navigation examples, NavFoM can follow people, respond to verbal instructions, search for objects, or navigate traffic. It also works across different robot types, including drones, quadrupeds, and vehicles, which makes it easier to deploy at scale. By processing both visual input and user commands in real time, it helps robots move through complex spaces like warehouses, public areas, or unfamiliar terrain with minimal setup.

🌀 Tom’s Take:

Generalization is a major unlock for robotics. Galbot's models show how robotics is shifting from narrow, task-specific systems to flexible tools that can adapt across jobs, settings, and hardware.

Source: PR Newswire / Galbot