Archetype Unveils “Lenses” as Interpretive Layer for Physical AI

- Archetype AI introduces “Lenses” as a new framework for converting sensor data into insights using its Newton model.

- The company positions Lenses as continuously operating tools for human empowerment, rather than task-automating agents.

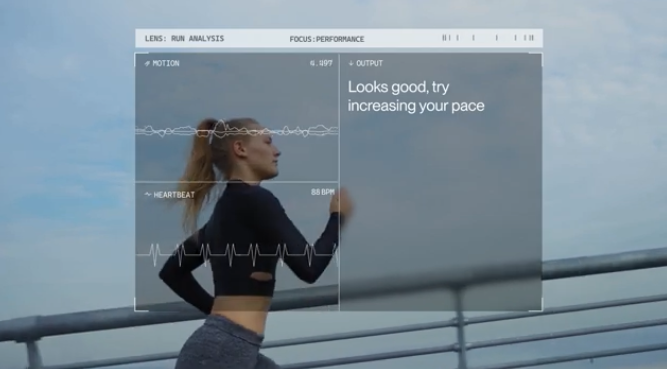

Archetype AI has introduced Lenses, a new kind of AI application built to interpret sensor data from the physical world. Instead of automating tasks, Lenses help people understand what’s happening around them by turning raw data into structured insights. Each Lens is powered by Archetype’s Newton model, is designed for a specific need, and runs continuously. Archetype’s stated goal is to make Physical AI easier to use and more useful, with the broader aim of using AI to strengthen human understanding of the world.

Unlike traditional AI agents, Lenses don't act autonomously. They continuously process real-time sensor inputs to help users make more informed decisions. Each Lens can be configured with simple, natural-language instructions and adjusted on the fly using a feature called Focus. Depending on the context, a Lens can analyze historical data, monitor current conditions, or anticipate future trends.
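To make the idea concrete, here is a minimal toy sketch of the pattern described above: a component that is set up with a plain-language instruction, watches a stream of readings continuously, and reports insights without taking any action itself. It is not Archetype's API. `ToyLens`, `Insight`, `refocus`, and the rolling-mean threshold are all hypothetical stand-ins; in the real product the Newton model interprets the instruction, whereas this sketch stores it only as a label.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable, Iterator


@dataclass
class Insight:
    timestamp: float
    summary: str


@dataclass
class ToyLens:
    """Hypothetical illustration only; not Archetype's actual interface."""
    instruction: str   # natural-language purpose (stored here, not interpreted)
    focus: str         # stand-in for the "Focus" feature
    threshold: float   # assumed rule replacing real model inference
    window: int = 3    # number of recent readings to average

    def refocus(self, focus: str, threshold: float) -> None:
        # Adjust what the lens attends to without rebuilding it.
        self.focus = focus
        self.threshold = threshold

    def observe(self, readings: Iterable[tuple[float, float]]) -> Iterator[Insight]:
        # Consume (timestamp, value) pairs and yield insights.
        # The lens only reports; it never acts on the system it watches.
        recent: list[float] = []
        for ts, value in readings:
            recent = (recent + [value])[-self.window:]
            avg = mean(recent)
            if len(recent) == self.window and avg > self.threshold:
                yield Insight(ts, f"{self.focus}: rolling mean {avg:.1f} exceeds {self.threshold:.1f}")


lens = ToyLens(
    instruction="Watch motor vibration and flag signs of wear",
    focus="vibration",
    threshold=5.0,
)
stream = [(0.0, 4.0), (1.0, 5.0), (2.0, 6.0), (3.0, 7.0)]
insights = list(lens.observe(stream))

# Tighten the focus on the fly and look at the same data again.
lens.refocus("vibration", 4.0)
refocused = list(lens.observe(stream))
```

With these sample readings, the first pass yields a single insight at the final timestamp, and the tighter threshold yields two. What the person does with that information is left to them, which is the distinction from an agent that the article draws.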

Examples range from traffic incident detection to predictive maintenance and performance monitoring. Archetype positions Lenses as a shift from agent-based delegation to interpretation-based empowerment, in line with its broader mission to enhance rather than replace human decision-making.

Source: YouTube/Archetype

🌀Tom's Take:

Spatial computing is, at its heart, about giving machines perception: the ability to sense and interpret the world. Archetype’s Semantic Lenses sit squarely at that intersection, providing the interpretive layer on which smarter AI agents will depend.

Source: Archetype AI Blog