AI Wayfinding Companion Project Shows the Power of Spatial Intelligence

- AI Wayfinding Companion is a pendant camera that uses VPS, multimodal AI, and voice to deliver environment-aware navigation and assistance.

- Built in a 5-day hackathon by 3 UX engineers at Niantic Spatial, the device shows how wearable cameras can unlock new use cases for AI.

Wearable cameras are becoming the eyes of AI, giving it a view of the world so it can be smarter, more contextual, and deliver more meaningful results. That’s exactly what’s on display in a wearable project from a team of UX engineers at Niantic Spatial.

The wearable pendant, called AI Wayfinding Companion, was created by a team of three, Ian Curtis, Emma McMillin, and Jim O'Donnell, as part of a 5-day hackathon held at Niantic Spatial. It's meant to be worn as a specialized companion for navigation and environmental understanding.

Source: YouTube / Ian Curtis

Curtis told Remix Reality that the team selected a pendant design because they wanted something screen-free, hands-free, and socially approachable. The necklace form factor also lets the camera sit at chest height, giving it a more natural perspective.

The device was built using an ESP32-CAM, a low-cost microcontroller with Wi-Fi, Bluetooth, and an integrated camera. A microphone was added, and the components were encased in a 3D-printed shell designed as a pendant. The device connects to a smartphone web app that the team built, and data processing happens in the cloud.

The AI brain behind the device used a number of systems, including Niantic Spatial's visual positioning system (VPS) for localization, Google DeepMind (Gemini) for real-time visual analysis, and ElevenLabs for natural voice responses. The team used bolt.new to generate app logic and write the majority of the code, and Supabase for edge function routing.
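To make the flow described above concrete, here is a minimal sketch of how a single query might pass through the three stages: localization, visual analysis, then voice. It is an illustration only, not the team's code. The names `Pose`, `CompanionPipeline`, `handle_query`, and the three `fake_*` stand-ins are all hypothetical, and no real VPS, Gemini, or ElevenLabs API is called; each stage is a plain function that a real service client could replace.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Pose:
    """Hypothetical localization result (not an actual VPS response type)."""
    latitude: float
    longitude: float
    heading_deg: float


@dataclass
class CompanionPipeline:
    """Chains three pluggable stages: localize -> describe -> speak."""
    localize: Callable[[bytes], Optional[Pose]]            # stand-in for a VPS call
    describe: Callable[[bytes, Optional[Pose], str], str]  # stand-in for a multimodal model
    speak: Callable[[str], bytes]                          # stand-in for text-to-speech

    def handle_query(self, frame: bytes, question: str) -> bytes:
        pose = self.localize(frame)                     # where is the wearer?
        answer = self.describe(frame, pose, question)   # what does the camera see?
        return self.speak(answer)                       # reply as audio bytes


# Stand-in stages so the sketch runs without any network access.
def fake_localize(frame: bytes) -> Optional[Pose]:
    # Pretend localization fails when there is no image data.
    return Pose(37.7955, -122.3937, 90.0) if frame else None


def fake_describe(frame: bytes, pose: Optional[Pose], question: str) -> str:
    if pose:
        where = f"near ({pose.latitude:.4f}, {pose.longitude:.4f})"
    else:
        where = "at an unknown location"
    return f"You are {where}. You asked: {question}"


def fake_speak(text: str) -> bytes:
    # A real stage would return synthesized audio; here we just encode the text.
    return text.encode("utf-8")


if __name__ == "__main__":
    pipeline = CompanionPipeline(fake_localize, fake_describe, fake_speak)
    print(pipeline.handle_query(b"jpeg-bytes", "Where is the exit?"))
```

Keeping each stage behind a simple function makes it easy to swap one service for another, or to fall back to an answer without location when localization fails.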

Niantic Spatial, a geospatial AI company spun out from Niantic Labs, has a powerful VPS, which is critical to powering the wearable solution. It can pinpoint location in crowded cities, indoors, or places where GPS struggles, enabling accurate, environment-aware guidance. When combined with AI for real-world understanding, it unlocks a number of use cases for the pendant. These include navigating unfamiliar cities or transit hubs, delivering packages to the right door, assisting people with visual impairments, and asking questions to learn more about your surroundings.

Safety and privacy were top priorities for the team. They designed the device to be minimal and compact so it feels like an accessory, not a camera in your face. Curtis said they chose to “celebrate” the device by making it brighter, customizable, and visually intentional, so it feels friendly rather than secretive. He added that the team explored other privacy-focused features, including using GPT-OSS (OpenAI’s open-weight models) for on-device LLM processing to keep conversations and visual data private, reducing the camera’s size, and adding tools like real-time face blurring and other built-in safeguards.

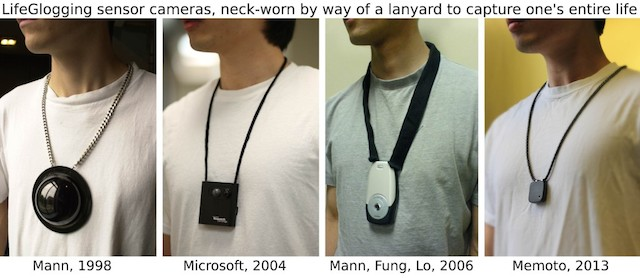

We’ve seen wearable cameras before, like the Narrative Clip from 2014 and, most recently, the Humane AI Pin. The concept goes back even further, to 1988, when Steve Mann, often called the father of wearable computing, built a camera with a fisheye lens and sensors to “LifeGlog,” that is, to create a log that required no conscious thought or effort.

We’ve also seen wearable pendants with microphones that listen to the world, like Friend and Pendant. It's not surprising that these devices are coming back because multimodal AI can finally make full use of the cameras, mics, and sensors they carry. This is why the rumored first product from OpenAI is expected to be a companion device packed with sensors, including a camera.

Curtis and his team show where AI is headed. When real-world sensors meet spatial intelligence, AI stops being a tool that reacts and starts becoming one that understands. This combination is the catalyst for AI’s next leap, enabling systems that can see, locate, and interpret the world in real time. And because AI is now building the tools as well as powering them, the path from idea to working product has never been faster.

From the comments on Curtis’s social media posts, there’s clear interest and enthusiasm for the device. In response to one eager commenter who said they couldn’t wait to get one, Curtis noted, “This really was a big push to get this done for the hackathon. Then taking a breath, digesting everything we have created so far. Also very open to feedback from the community!”

Disclosure: Tom Emrich has previously worked with or holds interests in companies mentioned. His commentary is based solely on public information and reflects his personal views.