1X NEO Uses Generated Video to Act in the Real World

- 1XWM uses video generation to predict how a task should unfold, then extracts robot actions directly from the video.

- The system runs zero-shot on NEO, completing novel tasks without prior robot training data.

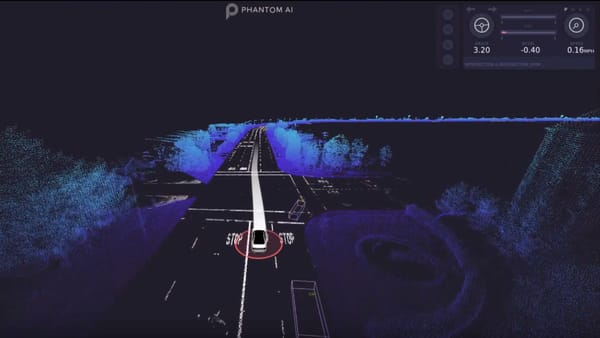

1X has introduced 1XWM, a world model that enables robots to take real-world actions by generating video predictions. Unlike typical robot systems that rely on large volumes of robot-specific training data, 1XWM learns from internet-scale human video. Given a prompt and a starting image, it predicts how a task should unfold by generating a short video, then converts that into a step-by-step action sequence for the robot to perform.

Source: YouTube / 1X

This approach, known as a video-pretrained world model policy, lets 1XWM reason about physical dynamics rather than just recognize objects or scenes. Because NEO, 1X’s humanoid robot, has a human-like form and hardware design, the spatial, kinematic, and physical cues in the generated video correspond to motions the robot can actually perform. A second system, the Inverse Dynamics Model, then translates the video into executable motor commands that are physically valid for the robot.
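The two-stage flow described above (generate a video, then recover actions from it) can be illustrated with a toy sketch. This is not 1X's implementation: every name here (`toy_world_model`, `toy_inverse_dynamics`, `plan_actions`) is hypothetical, a "frame" is reduced to a 2D hand position instead of an image, and an "action" is just the displacement between consecutive frames.

```python
from typing import List, Sequence, Tuple

Frame = Tuple[float, float]   # toy stand-in for an image: a 2D hand position
Action = Tuple[float, float]  # toy stand-in for a motor command: a 2D displacement

# Hypothetical prompt-to-goal table; a real model would learn this from video.
GOALS = {"reach right": (1.0, 0.0), "reach up": (0.0, 1.0)}


def toy_world_model(prompt: str, start: Frame, num_frames: int = 5) -> List[Frame]:
    """Stage 1 (assumed): predict how the task unfolds as a short 'video'.

    Here the prediction is a straight-line interpolation from the start
    frame to the goal associated with the prompt.
    """
    if num_frames < 2:
        raise ValueError("need at least two frames to describe motion")
    goal = GOALS[prompt]
    steps = num_frames - 1
    return [
        (start[0] + (goal[0] - start[0]) * i / steps,
         start[1] + (goal[1] - start[1]) * i / steps)
        for i in range(num_frames)
    ]


def toy_inverse_dynamics(frames: Sequence[Frame]) -> List[Action]:
    """Stage 2 (assumed): infer the action linking each pair of consecutive frames."""
    return [
        (nxt[0] - cur[0], nxt[1] - cur[1])
        for cur, nxt in zip(frames, frames[1:])
    ]


def plan_actions(prompt: str, start: Frame, num_frames: int = 5) -> List[Action]:
    """Chain the two stages: prompt + start frame -> video -> action sequence."""
    video = toy_world_model(prompt, start, num_frames)
    return toy_inverse_dynamics(video)


if __name__ == "__main__":
    for step, action in enumerate(plan_actions("reach right", (0.0, 0.0))):
        print(step, action)
```

The point of the split is that the first stage needs no knowledge of the robot's motors, while the second stage only has to explain the motion between adjacent frames.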

This design lets 1XWM handle a wide range of tasks without being trained on robot-specific examples. According to the company, NEO has completed new tasks in zero-shot settings, such as using both hands or interacting with unfamiliar objects, by relying only on patterns learned from human video.

🌀 Tom’s Take:

1XWM is a video-pretrained world model used for control. It imagines how a task will unfold, then carries out that plan on a real robot. This goes beyond simulation by turning video prediction directly into action.

Source: 1X